Second Meaningful Content: the Worst Performance Metric

Personalized and A/B content tests are common features of many websites today because they can help teams improve conversion and engagement. In recent years, however, we’ve seen many sites move these dynamic content workflows from the server side to the client side to make it easier for marketing and UX teams to work with less developer support. Unfortunately, this shift to client-side JS can have a large impact on performance.

The Old Wait & Switch Gag

To recap a typical implementation of this pattern: the server delivers usable HTML content along with some JavaScript that will block the page from rendering while the script(s) load and evaluate. That blocking period is the first performance antipattern of the workflow, but a more egregious one follows: once the script executes, it will remove a piece of content from the page and put a new piece of content in its place. That new content might be more targeted to a given user, but the time and complexity involved in delivering it can mean long delays for users. Ironically, the opportunity to increase conversion with these tools may come at the cost of some users’ ability to use the site at all.

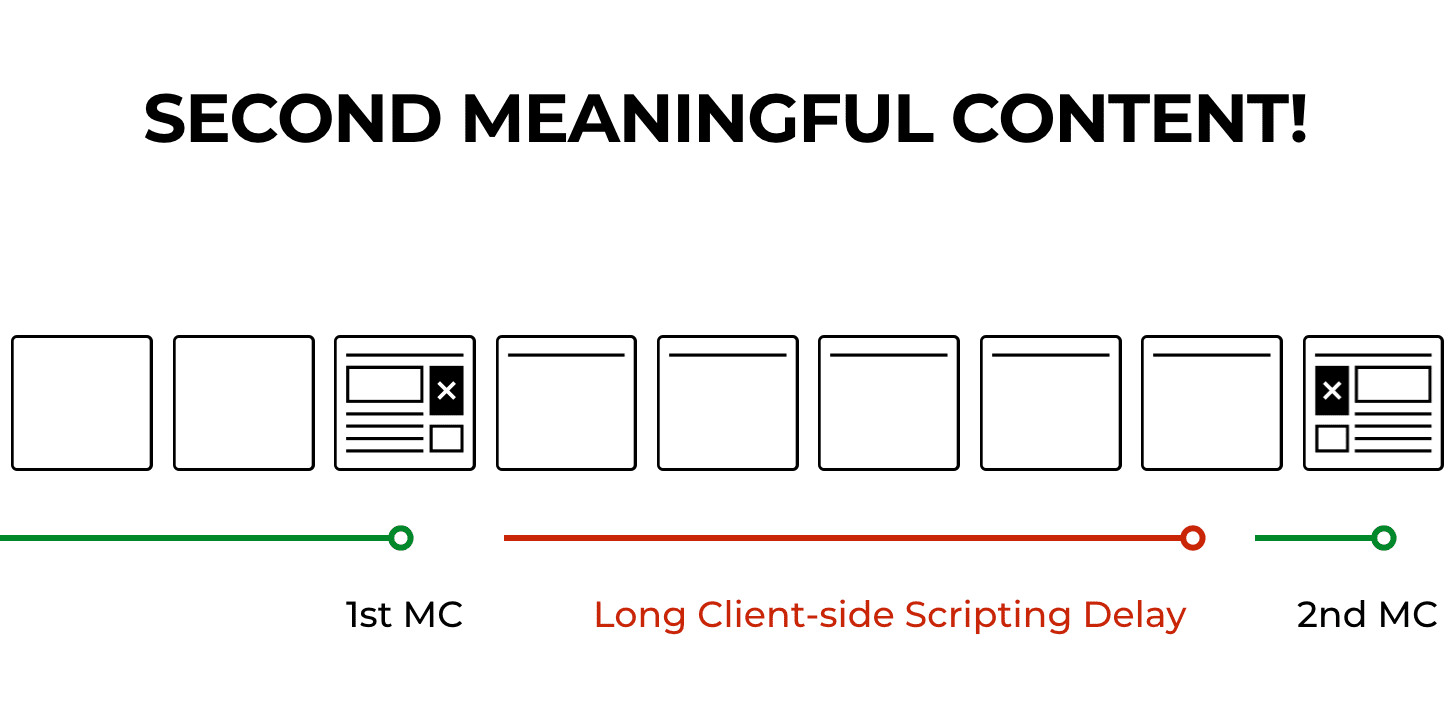

This pattern leads to such a unique effect on page load that at last week’s Perf.Now() Conference, I coined a new somewhat tongue-in-cheek performance metric called “Second Meaningful Content,” which occurs long after the handy First Meaningful Content (or Paint) metric, which would otherwise mark the time at which a page starts to appear usable.

A visual timeline of the pattern tends to look like this:

This pattern can take a formerly fast, optimized site and render it (heh, sorry) painfully slow; we’ve seen sites with pages that used to be interactive in 4 or 5 seconds on an average phone and network suddenly taking 12 seconds to even begin to look usable.

Part of the reason for this delay is that JavaScript takes a long time to process on smartphones today, even if it downloads fast. For example, the average new smartphone currently takes 2 seconds to process 200kb of compressed JavaScript, which just happens to be the size of the A/B JavaScript in use on a site I’m auditing right this moment. Another part of the delay is that sometimes these scripts will kick off additional blocking requests to the server while the user waits, introducing even more cycles of downloading and evaluating scripts.
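To put that figure in rough numbers, here is a back-of-the-envelope sketch. It assumes processing time scales linearly with compressed script size, which real devices only approximate. The function name and the rate constant are illustrative, derived solely from the 2-seconds-per-200kb figure quoted above.

```javascript
// Rate implied by the figure above: 2000 ms for 200 kb of compressed JS.
// Assumption: cost grows linearly with size (a simplification).
const MS_PER_COMPRESSED_KB = 2000 / 200; // 10 ms per kb

// Hypothetical helper: estimate how long a script takes to process
// after it has downloaded. Download time is not included.
function estimateProcessingMs(compressedKb, msPerKb = MS_PER_COMPRESSED_KB) {
  if (!Number.isFinite(compressedKb) || compressedKb < 0) {
    throw new RangeError("compressedKb must be a non-negative number");
  }
  return compressedKb * msPerKb;
}

console.log(estimateProcessingMs(200)); // 2000
console.log(estimateProcessingMs(50)); // 500
```

An estimate like this covers only the processing cost. Any additional blocking requests the script kicks off would add their own download and evaluation time on top.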

Ways to Mitigate

This pattern has become so commonly integrated on top of sites nowadays that it seems it’s now easier to make a fast website than it is to keep a website fast. So what can we do about it?

- Only A/B test repeat views: First, we can recommend that site owners use these client-side tools in less-critical situations so that they cause less damage. For example, they could choose not to vary the content for first-time visitors, and instead just preload the scripts so that they’re cached and ready for use in subsequent page views. Of course, having the scripts cached will only help with the loading time. The time the scripts spend actually executing after they’re loaded is the more problematic and harmful delay.

- Preload the Inevitable: Using `link[rel=preload]` elements in the `head` of an HTML document to preload scripts that will be requested at a later time can dramatically improve loading time. Once again, this won’t change the delays incurred by evaluating and running the scripts after they download, but it can save seconds in upfront load time.

- Only A/B content below the fold and (ideally) delay the scripts: Along similar lines, we could recommend that dynamic content is only used for portions of a page that are outside of the viewport when the page first loads, far down the page so that users may not see the flash of unswapped content happen. If this use case is possible, it might be worth also considering whether the blocking scripts can be referenced and loaded later as well, perhaps just before the piece of content that they drive.

- Move to server-side testing: Lastly, and ideally, we can recommend that sites replace their client-side content tools with those that work on the server side, so the hard work can take place before the content is delivered to the browser. Many personalization and A/B test toolkits offer server-side alternatives to their client-side tools, and we should push site owners to consider these tools instead.
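The first two suggestions above (test only repeat views, and preload the script for everyone else) could be combined in whatever code renders the page's head. The following is a minimal sketch under stated assumptions, not any particular tool's API: the `seen_before` cookie name, the `/js/ab-test.js` path, and all function names are hypothetical.

```javascript
// Hypothetical cookie name marking a visitor who has been here before.
const SEEN_COOKIE = "seen_before";

// Returns true when the raw Cookie header contains the marker cookie.
function isRepeatVisitor(cookieHeader) {
  return (cookieHeader || "")
    .split(";")
    .map((pair) => pair.trim().split("=")[0])
    .includes(SEEN_COOKIE);
}

// Escape characters that could break out of a double-quoted HTML attribute.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// First visit: only warm the cache with a preload hint.
// Repeat visit: emit the (blocking) test script itself.
function abScriptMarkup(cookieHeader, scriptUrl) {
  const src = escapeAttr(scriptUrl);
  return isRepeatVisitor(cookieHeader)
    ? `<script src="${src}"></script>`
    : `<link rel="preload" href="${src}" as="script">`;
}

console.log(abScriptMarkup("", "/js/ab-test.js"));
// <link rel="preload" href="/js/ab-test.js" as="script">
console.log(abScriptMarkup("theme=dark; seen_before=1", "/js/ab-test.js"));
// <script src="/js/ab-test.js"></script>
```

The server would also need to set the marker cookie on the first response for the second branch to ever run. As noted in the list, this only trims download time on the repeat view. The script still has to execute once it is loaded.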

In an upcoming follow-up post, I will detail an approach we’ve been experimenting with recently that gives us the benefits of a server approach with the familiarity and flexibility of doing it with JavaScript. Stay tuned!